This topic describes how to call the remote computing service in a client program and view the results on the web page.

7.4. C/S API calls

When you use the remote computing service of MindOpt, solving an optimization problem is split into two parts: modeling on the client and solving on the compute server. The two parts communicate through APIs. You can call the standard MindOpt APIs (C, C++, or Python) to build an optimization model, set the algorithm parameters, and then submit a job to the server for solving. APIs in more languages will be supported in the future.

After the server receives the job, it returns a unique job ID (job_id).

You can use this job ID to query and monitor the solving status of the optimization model, which can be Submitted, Solving, Failed, or Finished. Once the job ends (in the Finished or Failed state), the client can obtain the result from the server.
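As a minimal sketch of the four job states described above, the following Python snippet models them as an enumeration and tests whether a job has ended. The class and function names are hypothetical and not part of MindOpt, and the sketch assumes the server reports the states with exactly the spellings used in this topic.

```python
from enum import Enum


class JobStatus(Enum):
    """Job states named in this topic (spelling assumed to match the server)."""
    SUBMITTED = "Submitted"
    SOLVING = "Solving"
    FAILED = "Failed"
    FINISHED = "Finished"


def is_terminal(status: str) -> bool:
    """Return True once the job has ended, that is, Finished or Failed."""
    # JobStatus(status) raises ValueError for a string that is not a known state.
    return JobStatus(status) in (JobStatus.FINISHED, JobStatus.FAILED)
```

A client would only try to download the result after `is_terminal` returns True for the status it received.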

Two types of APIs are used to submit jobs and obtain results from the client.

APIs for submitting jobs: used to submit a job to the server and obtain a job ID returned by the server.

APIs for obtaining results: used to retrieve the solving status by using the job ID and download the computing result.

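| API | C | C++ | Python |
|---|---|---|---|
| For submitting jobs | Mdo_submitTask | submitTask | submit_task |
| For obtaining results | Mdo_retrieveTask | retrieveTask | retrieve_task |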

When you submit a job, you need to upload the serialized model and parameter files to the remote server for solving. When you obtain results, you also need to load the serialized model and parameter files. To cover different application scenarios, you can choose between two methods of locating the serialized files.

Method 1: You define the names of the stored model and parameter files yourself.

For example, for the C programming language, model_file and param_file specify the names of the serialized model file and parameter file for storage, respectively. If the file name contains a path, you need to ensure that the path exists and you have the write permission on the path. Otherwise, an error is reported.

In the optimization program, instruct the solver to write the two files to the specified locations.

Mdo_writeTask(model, model_file, MDO_YES, MDO_NO, MDO_NO);

Mdo_writeTask(model, param_file, MDO_NO, MDO_YES, MDO_NO);

Set related parameters to instruct the solver to upload the corresponding files to the remote server for solving.

Mdo_setStrParam(model, "Remote/File/Model", model_file);

Mdo_setStrParam(model, "Remote/File/Param", param_file);

If no model is available in memory when you obtain the result, call the following functions to load the serialized model and parameter files:

Mdo_readTask(model, model_file, MDO_YES, MDO_NO, MDO_NO);

Mdo_readTask(model, param_file, MDO_NO, MDO_YES, MDO_NO);

When you obtain the result, the computing result returned by the remote server needs to be stored. Therefore, you also need to specify a path for storing the serialized solution file. If the file name contains a path, ensure that the path exists and you have the write permission on the path. Otherwise, an error is reported. The "Remote/File/Model" and "Remote/File/Param" parameters need to be specified for reading serialized files.

const char * soln_file = "./my_soln.mdo";

Mdo_setStrParam(model, "Remote/File/Soln", soln_file);

Mdo_setStrParam(model, "Remote/File/Model", model_file);

Mdo_setStrParam(model, "Remote/File/Param", param_file);
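Because both submission and retrieval report an error when the target path is missing or not writable, it can help to check the paths before calling the solver. The following Python sketch shows one way to do this with the standard library only. The function name is hypothetical, and the check is an assumption about what a useful pre-flight test looks like, not a description of what MindOpt does internally.

```python
import os


def check_writable_target(file_name: str) -> None:
    """Raise an error if the directory that would hold file_name is unusable."""
    # A bare file name such as "my_model.mdo" resolves to the current directory.
    directory = os.path.dirname(os.path.abspath(file_name))
    if not os.path.isdir(directory):
        raise FileNotFoundError(f"directory does not exist: {directory}")
    if not os.access(directory, os.W_OK):
        raise PermissionError(f"no write permission on: {directory}")
```

For example, calling `check_writable_target("./my_soln.mdo")` before retrieving a result surfaces a path problem early instead of after the job has finished.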

Method 2: You only need to specify the path of the folder where the files are to be stored, such as filepath, and ensure that the path exists and you have the write permission on the path. Then, specify "Remote/File/Path" when setting parameters. The solver automatically writes the serialized files under the specified folder.

const char * filepath = "./tmp";

Mdo_setStrParam(model, "Remote/File/Path", filepath);

After you specify "Remote/File/Path" when you obtain the result, the solver fetches the serialized files from the corresponding path based on filepath and job_id and loads the files to the model and parameters. For example, if job_id is 10, the names of serialized model, parameter, and solution files are m10.mdo, p10.mdo, and s10.mdo, respectively.
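The naming scheme in the example above can be sketched as a small Python helper. The function name is hypothetical, and the pattern (prefix m, p, or s, followed by the job ID and the .mdo extension) is inferred from the single job_id 10 example, so treat it as an assumption rather than a specification.

```python
import os


def task_file_names(folder: str, job_id: str) -> dict:
    """Build the model, parameter, and solution file paths for one job."""
    return {
        "model": os.path.join(folder, f"m{job_id}.mdo"),
        "param": os.path.join(folder, f"p{job_id}.mdo"),
        "soln": os.path.join(folder, f"s{job_id}.mdo"),
    }
```

With `folder="./tmp"` and `job_id="10"`, the helper yields paths ending in m10.mdo, p10.mdo, and s10.mdo, which is useful when you want to inspect or clean up the files that the solver wrote.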

All APIs are based on the C API. The C API constructs the internal data structures, calls the optimization algorithm in MindOpt, and generates related information about the solution. When you run MindOpt on your local computer, the C API constructs related data structures in the local memory. On the compute server, the C API of MindOpt inputs the received model and parameter data into the optimization algorithm and outputs solution data.

The following part describes how to call APIs to use the remote computing service in different client SDKs (C, C++, and Python).

7.5. Example: C program

The following part describes how to call the Mdo_submitTask and Mdo_retrieveTask APIs to submit a job and obtain the result in a C program.

7.5.1. Upload a model

7.5.1.1. Read the model from the MPS file and upload it

This part describes the process of reading the MPS file, setting algorithm parameters, serializing the model and parameters, and submitting a job. If the job is successfully submitted, the server returns a job_id, which can be used to query the job status.

Note

You need to provide necessary information such as the prepared MPS file, algorithm parameters, and server token (you need to obtain your own token).

/**
 *  Description
 *  -----------
 *
 *  Input model, specify parameters, serialize all the inputs, and then upload these data onto server.
 */
#include <stdio.h>
#include "Mindopt.h"

/* Macro to check the return code */
#define MDO_CHECK_CALL(MDO_CALL) \
    code = MDO_CALL; \
    if (code != MDO_OKAY) \
    { \
        Mdo_explainResult(model, code, str); \
        Mdo_freeMdl(&model); \
        fprintf(stderr, "===================================\n"); \
        fprintf(stderr, "Error : code <%d>\n", code); \
        fprintf(stderr, "Reason : %s\n", str); \
        fprintf(stderr, "===================================\n"); \
        return (int)code; \
    }

int main(
    int argc,
    char * argv[])
{
    /* Variables. */
    char str[1024] = { "\0" };
    /* Input mps file (optimization problem) */
    const char * input_file = "./afiro.mps";
    /* Output serialized files */
    const char * model_file = "./my_model.mdo";
    const char * param_file = "./my_param.mdo";
    MdoResult code = MDO_OKAY;
    MdoMdl * model = NULL;
    char job_id[1024] = { "\0" };

    /*------------------------------------------------------------------*/
    /* Step 1. Create a model and change the parameters.                */
    /*------------------------------------------------------------------*/
    /* Create an empty model. */
    MDO_CHECK_CALL(Mdo_createMdl(&model));

    /*------------------------------------------------------------------*/
    /* Step 2. Input model and parameters.                              */
    /*------------------------------------------------------------------*/
    /* Read model from file. */
    MDO_CHECK_CALL(Mdo_readProb(model, input_file));

    /* Input parameters. */
    Mdo_setIntParam(model, "NumThreads", 4);
    Mdo_setRealParam(model, "MaxTime", 3600);

    /*------------------------------------------------------------------*/
    /* Step 3. Serialize the model and the parameters.                  */
    /*------------------------------------------------------------------*/
    MDO_CHECK_CALL(Mdo_writeTask(model, model_file, MDO_YES, MDO_NO, MDO_NO));
    MDO_CHECK_CALL(Mdo_writeTask(model, param_file, MDO_NO, MDO_YES, MDO_NO));

    /*------------------------------------------------------------------*/
    /* Step 4. Input parameters related to remote computing.            */
    /*------------------------------------------------------------------*/
    /* Input parameters related to remote computing. */
    Mdo_setStrParam(model, "Remote/Token", "xxxxxxxxxtokenxxxxxxxx"); // Change to your token
    Mdo_setStrParam(model, "Remote/Desc", "my model");
    Mdo_setStrParam(model, "Remote/Server", "127.0.0.1"); // Change to your server IP
    Mdo_setStrParam(model, "Remote/File/Model", model_file);
    Mdo_setStrParam(model, "Remote/File/Param", param_file);

    /*------------------------------------------------------------------*/
    /* Step 5. Upload the serialized model and parameters to server,    */
    /*         and then optimize the model.                             */
    /*------------------------------------------------------------------*/
    MDO_CHECK_CALL(Mdo_submitTask(model, job_id));
    if (job_id[0] == '\0')
    {
        printf("ERROR: Invalid job ID: %s\n", job_id);
    }
    else
    {
        printf("Job was submitted to server successfully.\n");
        printf("User may query the optimization result with the following job ID: %s\n", job_id);
    }

    /*------------------------------------------------------------------*/
    /* Step 6. Free the model.                                          */
    /*------------------------------------------------------------------*/
    /* Free the model. */
    Mdo_freeMdl(&model);

    return (int)code;
}

7.5.1.2. Customize an optimization model

This part describes the process of customizing an optimization model, setting algorithm parameters, serializing the model and parameters, and submitting a job. If the job is successfully submitted, the server returns a job_id, which can be used to query the job status.

Note

You need to provide necessary information such as the model data, algorithm parameters, and server token (you need to obtain your own token).

/**
 *  Description
 *  -----------
 *
 *  Input model, specify parameters, serialize all the inputs, and then upload these data onto server.
 */
#include <stdio.h>
#include "Mindopt.h"

/* Macro to check the return code */
#define MDO_CHECK_CALL(MDO_CALL) \
    code = MDO_CALL; \
    if (code != MDO_OKAY) \
    { \
        Mdo_explainResult(model, code, str); \
        Mdo_freeMdl(&model); \
        fprintf(stderr, "===================================\n"); \
        fprintf(stderr, "Error : code <%d>\n", code); \
        fprintf(stderr, "Reason : %s\n", str); \
        fprintf(stderr, "===================================\n"); \
        return (int)code; \
    }

int main(
    int argc,
    char * argv[])
{
    /* Variables. */
    char str[1024] = { "\0" };
    const char * model_file = "./my_model.mdo";
    const char * param_file = "./my_param.mdo";
    MdoResult code = MDO_OKAY;
    MdoMdl * model = NULL;
    char job_id[1024] = { "\0" };

    const int row1_idx[] = { 0, 1, 2, 3 };
    const double row1_val[] = { 1.0, 1.0, 2.0, 3.0 };
    const int row2_idx[] = { 0, 2, 3 };
    const double row2_val[] = { 1.0, -1.0, 6.0 };

    /*------------------------------------------------------------------*/
    /* Step 1. Create a model and change the parameters.                */
    /*------------------------------------------------------------------*/
    /* Create an empty model. */
    MDO_CHECK_CALL(Mdo_createMdl(&model));

    /*------------------------------------------------------------------*/
    /* Step 2. Input model and parameters.                              */
    /*------------------------------------------------------------------*/
    /* Change to minimization problem. */
    Mdo_setIntAttr(model, "MinSense", MDO_YES);

    /* Add variables. */
    MDO_CHECK_CALL(Mdo_addCol(model, 0.0, 10.0, 1.0, 0, NULL, NULL, "x0", MDO_NO));
    MDO_CHECK_CALL(Mdo_addCol(model, 0.0, MDO_INFINITY, 1.0, 0, NULL, NULL, "x1", MDO_NO));
    MDO_CHECK_CALL(Mdo_addCol(model, 0.0, MDO_INFINITY, 1.0, 0, NULL, NULL, "x2", MDO_NO));
    MDO_CHECK_CALL(Mdo_addCol(model, 0.0, MDO_INFINITY, 1.0, 0, NULL, NULL, "x3", MDO_NO));

    /* Add constraints.
     * Note that the nonzero elements are inputted in a row-wise order here.
     */
    MDO_CHECK_CALL(Mdo_addRow(model, 1.0, MDO_INFINITY, 4, row1_idx, row1_val, "c0"));
    MDO_CHECK_CALL(Mdo_addRow(model, 1.0, 1.0, 3, row2_idx, row2_val, "c1"));

    /* Input parameters. */
    Mdo_setIntParam(model, "NumThreads", 4);
    Mdo_setRealParam(model, "MaxTime", 3600);

    /*------------------------------------------------------------------*/
    /* Step 3. Serialize the model and the parameters for later use.    */
    /*------------------------------------------------------------------*/
    MDO_CHECK_CALL(Mdo_writeTask(model, model_file, MDO_YES, MDO_NO, MDO_NO));
    MDO_CHECK_CALL(Mdo_writeTask(model, param_file, MDO_NO, MDO_YES, MDO_NO));

    /*------------------------------------------------------------------*/
    /* Step 4. Input parameters related to the remote computing server. */
    /*------------------------------------------------------------------*/
    /* Input parameters related to remote computing. */
    Mdo_setStrParam(model, "Remote/Token", "xxxxxxxxxtokenxxxxxxxx"); // Change to your token
    Mdo_setStrParam(model, "Remote/Desc", "my model");
    Mdo_setStrParam(model, "Remote/Server", "127.0.0.1"); // Change to your server IP
    Mdo_setStrParam(model, "Remote/File/Model", model_file);
    Mdo_setStrParam(model, "Remote/File/Param", param_file);

    /*------------------------------------------------------------------*/
    /* Step 5. Upload the serialized model and parameters to server,    */
    /*         and then optimize the model.                             */
    /*------------------------------------------------------------------*/
    MDO_CHECK_CALL(Mdo_submitTask(model, job_id));
    if (job_id[0] == '\0')
    {
        printf("ERROR: Invalid job ID: %s\n", job_id);
    }
    else
    {
        printf("Job was submitted to server successfully.\n");
        printf("User may query the optimization result with the following job ID: %s\n", job_id);
    }

    /*------------------------------------------------------------------*/
    /* Step 6. Free the model.                                          */
    /*------------------------------------------------------------------*/
    /* Free the model. */
    Mdo_freeMdl(&model);

    return (int)code;
}

7.5.2. Obtain the result

After you submit a computing job, you can use the following program to retrieve the computing result and download the solution to the problem. The program deserializes the model and parameters, queries the solving status, obtains the solution, and outputs the result.

Note

You need to provide necessary information such as the server token and job ID.

/**
 *  Description
 *  -----------
 *
 *  Check the solution status, download the solution, and populate the result.
 */
#include <stdio.h>
#include <string.h> /* strcpy */
#if defined(__APPLE__) || defined(__linux__)
#   include <unistd.h>
#   define SLEEP_10_SEC sleep(10)
#else
#   include <windows.h>
#   define SLEEP_10_SEC Sleep(10000)
#endif
#include "Mindopt.h"

/* Macro to check the return code */
#define MDO_CHECK_CALL(MDO_CALL) \
    code = MDO_CALL; \
    if (code != MDO_OKAY) \
    { \
        Mdo_explainResult(model, code, str); \
        Mdo_freeMdl(&model); \
        fprintf(stderr, "===================================\n"); \
        fprintf(stderr, "Error : code <%d>\n", code); \
        fprintf(stderr, "Reason : %s\n", str); \
        fprintf(stderr, "===================================\n"); \
        return (int)code; \
    }

int main(
    int argc,
    char * argv[])
{
    /* Variables. */
    char str[1024] = { "\0" };
    char status[1024] = { "\0" };
    /* Serialized files, must be the same as in the submission code */
    const char * model_file = "./my_model.mdo";
    const char * param_file = "./my_param.mdo";
    /* Output solution file */
    const char * soln_file = "./my_soln.mdo";
    const char * sol_file = "./my_soln.sol";
    MdoResult code = MDO_OKAY;
    MdoStatus model_status = MDO_UNKNOWN;
    char model_status_details[1024] = { "\0" };
    MdoResult result = MDO_OKAY;
    char result_details[1024] = { "\0" };
    MdoBool has_soln = MDO_NO;
    MdoMdl * model = NULL;
    char job_id[1024] = { "\0" };
    double val = 0.0;

    /*------------------------------------------------------------------*/
    /* Step 1. Create a model and change the parameters.                */
    /*------------------------------------------------------------------*/
    /* Create an empty model. */
    MDO_CHECK_CALL(Mdo_createMdl(&model));

    /*------------------------------------------------------------------*/
    /* Step 2. Read the serialized model and parameters -- this is      */
    /*         required while populating the optimization result.       */
    /*------------------------------------------------------------------*/
    MDO_CHECK_CALL(Mdo_readTask(model, model_file, MDO_YES, MDO_NO, MDO_NO));
    MDO_CHECK_CALL(Mdo_readTask(model, param_file, MDO_NO, MDO_YES, MDO_NO));

    /*------------------------------------------------------------------*/
    /* Step 3. Input parameters related to the remote computing server. */
    /*------------------------------------------------------------------*/
    Mdo_setStrParam(model, "Remote/Token", "xxxxxxxxxtokenxxxxxxxx"); // Change to your token
    Mdo_setStrParam(model, "Remote/Server", "127.0.0.1"); // Change to your server IP
    Mdo_setStrParam(model, "Remote/File/Model", model_file);
    Mdo_setStrParam(model, "Remote/File/Param", param_file);
    Mdo_setStrParam(model, "Remote/File/Soln", soln_file);
    strcpy(job_id, "1"); // Change to the job ID you received after submitting the task

    /*------------------------------------------------------------------*/
    /* Step 4. Check the solution status periodically, and              */
    /*         download it upon availability.                           */
    /*------------------------------------------------------------------*/
    do
    {
        MDO_CHECK_CALL(Mdo_retrieveTask(model, job_id, status, &model_status, &result, &has_soln));

        /* Sleep for 10 seconds only if the job is still Submitted or Solving. */
        if (status[0] == 'S')
        {
            SLEEP_10_SEC;
        }
    }
    while (status[0] == 'S'); /* Continue the loop while the status is Submitted or Solving. */

    /*------------------------------------------------------------------*/
    /* Step 5. Un-serialize the solution and then populate the result.  */
    /*------------------------------------------------------------------*/
    printf("\nPopulating optimization results.\n");
    Mdo_explainStatus(model, model_status, model_status_details);
    Mdo_explainResult(model, result, result_details);

    printf(" - Job status : %s\n", status);
    printf(" - Model status : %s (%d)\n", model_status_details, model_status);
    printf(" - Optimization status : %s (%d)\n", result_details, result);
    printf(" - Solution availability : %s\n", has_soln ? "available" : "not available");
    if (has_soln)
    {
        printf("\nPopulating solution.\n");
        MDO_CHECK_CALL(Mdo_readTask(model, soln_file, MDO_NO, MDO_NO, MDO_YES));
        Mdo_displayResults(model);
        Mdo_writeSoln(model, sol_file);

        Mdo_getRealAttr(model, "PrimalObjVal", &val);
        printf(" - Primal objective value : %e\n", val);
        Mdo_getRealAttr(model, "DualObjVal", &val);
        printf(" - Dual objective value : %e\n", val);
        Mdo_getRealAttr(model, "SolutionTime", &val);
        printf(" - Solution time : %e sec.\n", val);
    }

    /*------------------------------------------------------------------*/
    /* Step 6. Free the model.                                          */
    /*------------------------------------------------------------------*/
    /* Free the model. */
    Mdo_freeMdl(&model);

    return (int)code;
}
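The polling loop in the program above waits for as long as the job stays in the Submitted or Solving state. A bounded variant can be sketched as follows, shown in Python for brevity. The function name is hypothetical, and `retrieve` is a stand-in callable that returns the job status string. In a real client it would wrap the retrieval API of the SDK in use, which this sketch does not call directly.

```python
import time


def wait_for_job(retrieve, job_id, interval=10.0, timeout=3600.0, sleep=time.sleep):
    """Poll retrieve(job_id) until the status is no longer Submitted or Solving.

    retrieve is a hypothetical callable that returns the job status string.
    """
    waited = 0.0
    while True:
        status = retrieve(job_id)
        if status not in ("Submitted", "Solving"):
            return status
        if waited >= timeout:
            raise TimeoutError(f"job {job_id} still {status} after {waited:.0f} s")
        # Sleep only when another poll is going to follow.
        sleep(interval)
        waited += interval
```

Passing `sleep` as a parameter keeps the loop testable without real delays, and the timeout prevents a client from waiting forever on a job that never leaves the queue.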

7.6. Example: C++ program

The following section describes how to call the submitTask and retrieveTask APIs to submit a job and obtain the result in a C++ program.

7.6.1. Upload a model

7.6.1.1. Read the model from the MPS file and upload it

This part describes the process of reading the MPS file, setting algorithm parameters, serializing the model and parameters, and submitting a job. If the job is successfully submitted, the server returns a job_id, which can be used to query the job status.

Note

You need to provide necessary information such as the prepared MPS file, algorithm parameters, and server token (you need to obtain your own token).

/**
 *  Description
 *  -----------
 *
 *  Input model, specify parameters, serialize all the inputs, and then upload these data onto server.
 */
#include <iostream>
#include <string>
#include <vector>
#include "MindoptCpp.h"

using namespace mindopt;

int main(
    int argc,
    char * argv[])
{
    /* Variables. */
    /* Input mps file (optimization problem) */
    std::string input_file("./afiro.mps");
    /* Output serialized files */
    std::string model_file("./my_model.mdo");
    std::string param_file("./my_param.mdo");
    std::string job_id;

    /*------------------------------------------------------------------*/
    /* Step 1. Create a model and change the parameters.                */
    /*------------------------------------------------------------------*/
    /* Create an empty model. */
    MdoModel model;

    try
    {
        /*------------------------------------------------------------------*/
        /* Step 2. Input model and parameters.                              */
        /*------------------------------------------------------------------*/
        /* Read model from mps file (inside the try block so that a read failure is reported). */
        model.readProb(input_file);

        /* Input parameters. */
        model.setIntParam("NumThreads", 4);
        model.setRealParam("MaxTime", 3600);

        /*------------------------------------------------------------------*/
        /* Step 3. Serialize the model and the parameters.                  */
        /*------------------------------------------------------------------*/
        model.writeTask(model_file, MDO_YES, MDO_NO, MDO_NO);
        model.writeTask(param_file, MDO_NO, MDO_YES, MDO_NO);

        /*------------------------------------------------------------------*/
        /* Step 4. Input parameters related to remote computing.            */
        /*------------------------------------------------------------------*/
        /* Input parameters related to remote computing. */
        model.setStrParam("Remote/Token", "xxxxxxxxxtokenxxxxxxxx"); // Change to your token
        model.setStrParam("Remote/Desc", "my model");
        model.setStrParam("Remote/Server", "127.0.0.1"); // Change to your server IP
        model.setStrParam("Remote/File/Model", model_file);
        model.setStrParam("Remote/File/Param", param_file);

        /*------------------------------------------------------------------*/
        /* Step 5. Upload the serialized model and parameters to server,    */
        /*         and then optimize the model.                             */
        /*------------------------------------------------------------------*/
        job_id = model.submitTask();
        if (job_id == "")
        {
            std::cout << "ERROR: Empty job ID." << std::endl;
        }
        else
        {
            std::cout << "Job was submitted to server successfully." << std::endl;
            std::cout << "User may query the optimization result with the following job ID: " << job_id << std::endl;
        }
    }
    catch (MdoException & e)
    {
        std::cerr << "===================================" << std::endl;
        std::cerr << "Error : code <" << e.getResult() << ">" << std::endl;
        std::cerr << "Reason : " << model.explainResult(e.getResult()) << std::endl;
        std::cerr << "===================================" << std::endl;

        return static_cast<int>(e.getResult());
    }

    return static_cast<int>(MDO_OKAY);
}

7.6.1.2. Customize an optimization model

This part describes the process of customizing an optimization model, setting algorithm parameters, serializing the model and parameters, and submitting a job. If the job is successfully submitted, the server returns a job_id, which can be used to query the job status.

Note

You need to provide necessary information such as the model data, algorithm parameters, and server token (you need to obtain your own token).

/**
 *  Description
 *  -----------
 *
 *  Input model, specify parameters, serialize all the inputs, and then upload these data onto server.
 */
#include <iostream>
#include <string>
#include <vector>
#include "MindoptCpp.h"

using namespace mindopt;

int main(
    int argc,
    char * argv[])
{
    /* Variables. */
    std::string model_file("./my_model.mdo");
    std::string param_file("./my_param.mdo");
    std::string job_id;

    /*------------------------------------------------------------------*/
    /* Step 1. Create a model and change the parameters.                */
    /*------------------------------------------------------------------*/
    /* Create an empty model. */
    MdoModel model;

    try
    {
        /*------------------------------------------------------------------*/
        /* Step 2. Input model and parameters.                              */
        /*------------------------------------------------------------------*/
        /* Change to minimization problem. */
        model.setIntAttr("MinSense", MDO_YES);

        /* Add variables. */
        std::vector<MdoVar> x;
        x.push_back(model.addVar(0.0, 10.0, 1.0, "x0", MDO_NO));
        x.push_back(model.addVar(0.0, MDO_INFINITY, 1.0, "x1", MDO_NO));
        x.push_back(model.addVar(0.0, MDO_INFINITY, 1.0, "x2", MDO_NO));
        x.push_back(model.addVar(0.0, MDO_INFINITY, 1.0, "x3", MDO_NO));

        /* Add constraints. */
        model.addCons(1.0, MDO_INFINITY, 1.0 * x[0] + 1.0 * x[1] + 2.0 * x[2] + 3.0 * x[3], "c0");
        model.addCons(1.0, 1.0, 1.0 * x[0] - 1.0 * x[2] + 6.0 * x[3], "c1");

        /* Input parameters. */
        model.setIntParam("NumThreads", 4);
        model.setRealParam("MaxTime", 3600);

        /*------------------------------------------------------------------*/
        /* Step 3. Serialize the model and the parameters for later use.    */
        /*------------------------------------------------------------------*/
        model.writeTask(model_file, MDO_YES, MDO_NO, MDO_NO);
        model.writeTask(param_file, MDO_NO, MDO_YES, MDO_NO);

        /*------------------------------------------------------------------*/
        /* Step 4. Input parameters related to the remote computing server. */
        /*------------------------------------------------------------------*/
        /* Input parameters related to remote computing. */
        model.setStrParam("Remote/Token", "xxxxxxxxxtokenxxxxxxxx"); // Change to your token
        model.setStrParam("Remote/Desc", "my model");
        model.setStrParam("Remote/Server", "127.0.0.1"); // Change to your server IP
        model.setStrParam("Remote/File/Model", model_file);
        model.setStrParam("Remote/File/Param", param_file);

        /*------------------------------------------------------------------*/
        /* Step 5. Upload the serialized model and parameters to server,    */
        /*         and then optimize the model.                             */
        /*------------------------------------------------------------------*/
        job_id = model.submitTask();
        if (job_id == "")
        {
            std::cout << "ERROR: Empty job ID." << std::endl;
        }
        else
        {
            std::cout << "Job was submitted to server successfully." << std::endl;
            std::cout << "User may query the optimization result with the following job ID: " << job_id << std::endl;
        }
    }
    catch (MdoException & e)
    {
        std::cerr << "===================================" << std::endl;
        std::cerr << "Error : code <" << e.getResult() << ">" << std::endl;
        std::cerr << "Reason : " << model.explainResult(e.getResult()) << std::endl;
        std::cerr << "===================================" << std::endl;

        return static_cast<int>(e.getResult());
    }

    return static_cast<int>(MDO_OKAY);
}

7.6.2. Obtain the result

After you submit a computing job, you can use the following program to retrieve the computing result and download the solution to the problem. The program deserializes the model and parameters, queries the solving status, obtains the solution, and outputs the result.

Note

You need to provide necessary information such as the server token and job ID.

/**
 *  Description
 *  -----------
 *
 *  Check the solution status, download the solution, and populate the result.
 */
#include <iostream>
#include <string>
#include <vector>
#if defined(__APPLE__) || defined(__linux__)
#   include <unistd.h>
#   define SLEEP_10_SEC sleep(10)
#else
#   include <windows.h>
#   define SLEEP_10_SEC Sleep(10000)
#endif
#include "MindoptCpp.h"

using namespace mindopt;

int main(
    int argc,
    char * argv[])
{
    /* Variables. */
    /* Serialized files, must be the same as in the submission code */
    std::string model_file("./my_model.mdo");
    std::string param_file("./my_param.mdo");
    /* Output solution file */
    std::string soln_file("./my_soln.mdo");
    std::string sol_file("./my_soln.sol");
    MdoStatus model_status = MDO_UNKNOWN;
    std::string model_status_details;
    std::string status;
    MdoResult result = MDO_OKAY;
    std::string result_details;
    MdoBool has_soln = MDO_NO;
    std::string job_id;
    double val = 0.0;

    /*------------------------------------------------------------------*/
    /* Step 1. Create a model and change the parameters.                */
    /*------------------------------------------------------------------*/
    /* Create an empty model. */
    MdoModel model;

    try
    {
        /*------------------------------------------------------------------*/
        /* Step 2. Read the serialized model and parameters -- this is      */
        /*         required while populating the optimization result.       */
        /*------------------------------------------------------------------*/
        model.readTask(model_file, MDO_YES, MDO_NO, MDO_NO);
        model.readTask(param_file, MDO_NO, MDO_YES, MDO_NO);

        /*------------------------------------------------------------------*/
        /* Step 3. Input parameters related to the remote computing server. */
        /*------------------------------------------------------------------*/
        model.setStrParam("Remote/Token", "xxxxxxxxxtokenxxxxxxxx"); // Change to your token
        model.setStrParam("Remote/Server", "127.0.0.1"); // Change to your server IP
        model.setStrParam("Remote/File/Model", model_file);
        model.setStrParam("Remote/File/Param", param_file);
        model.setStrParam("Remote/File/Soln", soln_file);
        job_id = "1"; // Change to the job ID you received after submitting the task

        /*------------------------------------------------------------------*/
        /* Step 4. Check the solution status periodically, and              */
        /*         download it upon availability.                           */
        /*------------------------------------------------------------------*/
        do
        {
            status = model.retrieveTask(job_id, model_status, result, has_soln);
            /* Sleep for 10 seconds only if the job is still Submitted or Solving. */
            if (status[0] == 'S')
            {
                SLEEP_10_SEC;
            }
        }
        while (status[0] == 'S'); /* Continue the loop while the status is Submitted or Solving. */

        /*------------------------------------------------------------------*/
        /* Step 5. Un-serialize the solution and then populate the result.  */
        /*------------------------------------------------------------------*/
        std::cout << std::endl << "Populating optimization results." << std::endl;
        model_status_details = model.explainStatus(model_status);
        result_details = model.explainResult(result);

        std::cout << " - Job status : " << status << std::endl;
        std::cout << " - Model status : " << model_status_details << " (" << model_status << ")" << std::endl;
        std::cout << " - Optimization status : " << result_details << " (" << result << ")" << std::endl;
        std::cout << " - Solution availability : " << std::string(has_soln ? "available" : "not available") << std::endl;
        if (has_soln)
        {
            std::cout << "Populating solution." << std::endl;
            model.readTask(soln_file, MDO_NO, MDO_NO, MDO_YES);
            model.displayResults();
            model.writeSoln(sol_file);

            val = model.getRealAttr("PrimalObjVal");
            std::cout << " - Primal objective value : " << val << std::endl;
            val = model.getRealAttr("DualObjVal");
            std::cout << " - Dual objective value : " << val << std::endl;
            val = model.getRealAttr("SolutionTime");
            std::cout << " - Solution time : " << val << std::endl;
        }
    }
    catch (MdoException & e)
    {
        std::cerr << "===================================" << std::endl;
        std::cerr << "Error : code <" << e.getResult() << ">" << std::endl;
        std::cerr << "Reason : " << model.explainResult(e.getResult()) << std::endl;
        std::cerr << "===================================" << std::endl;

        return static_cast<int>(e.getResult());
    }

    return static_cast<int>(MDO_OKAY);
}

7.7. Example: Python program¶

The following part describes how to call the submit_task and retrieve_task APIs to submit a job and obtain the result in a Python program.

7.7.1. Upload a model¶

7.7.1.1. Read the model from the MPS file and upload it¶

This part describes the process of reading the MPS file, setting algorithm parameters, serializing the model and parameters, and submitting a job. If the job is successfully submitted, the server returns a job_id, which can be used to query the job status.

Note

You need to provide necessary information such as the prepared MPS file, algorithm parameters, and server token (you need to obtain your own token).

"""
/**
 *  Description
 *  -----------
 *
 *  Input model, specify parameters, serialize all the inputs, and then upload these data onto server.
 */
"""
from mindoptpy import *


if __name__ == "__main__":

    # Input MPS file (optimization problem)
    input_file = "./afiro.mps"
    # Output serialized files
    model_file = "./my_model.mdo"
    param_file = "./my_param.mdo"
    job_id = ""

    # Step 1. Create a model.
    model = MdoModel()

    try:
        # Step 2. Input model.
        # Reading inside the try block lets a read failure be reported below.
        model.read_prob(input_file)

        # Specify parameters.
        model.set_int_param("NumThreads", 4)
        model.set_real_param("MaxTime", 3600.0)

        # Step 3. Serialize the model and the parameters.
        model.write_task(model_file, True, False, False)
        model.write_task(param_file, False, True, False)

        # Step 4. Input parameters related to remote computing.
        model.set_str_param("Remote/Token", "xxxxxxxxxtokenxxxxxxxx")  # Change to your token
        model.set_str_param("Remote/Desc", "afiro model")
        model.set_str_param("Remote/Server", "127.0.0.1")  # Change to your server IP
        model.set_str_param("Remote/File/Model", model_file)
        model.set_str_param("Remote/File/Param", param_file)

        # Step 5. Upload the serialized model and parameters to the server, and then optimize the model.
        job_id = model.submit_task()
        if job_id == "":
            print("ERROR: Empty job ID.")
        else:
            print("Job was submitted to server successfully.")
            print("User may query the optimization result with the following job ID: {}".format(job_id))

    except MdoError as e:
        print("Received Mindopt exception.")
        print(" - Code          : {}".format(e.code))
        print(" - Reason        : {}".format(e.message))
    except Exception as e:
        print("Received exception.")
        print(" - Reason        : {}".format(e))
    finally:
        # Step 6. Free the model.
        model.free_mdl()

7.7.1.2. Customize an optimization model¶

This part describes the process of customizing an optimization model, setting algorithm parameters, serializing the model and parameters, and submitting a job. If the job is successfully submitted, the server returns a job_id, which can be used to query the job status.

Note

You need to provide necessary information such as the algorithm parameters and server token (you need to obtain your own token). No MPS file is required because the model is built in the program.

"""
/**
 *  Description
 *  -----------
 *
 *  Input model, specify parameters, serialize all the inputs, and then upload these data onto server.
 */
"""
from mindoptpy import *


if __name__ == "__main__":

    # Output serialized files
    model_file = "./my_model.mdo"
    param_file = "./my_param.mdo"
    job_id = ""
    MDO_INFINITY = MdoModel.get_infinity()

    # Step 1. Create a model.
    model = MdoModel()

    try:
        # Step 2. Input model.
        # Change to minimization problem.
        model.set_int_attr("MinSense", 1)

        # Add variables.
        x = []
        x.append(model.add_var(0.0, 10.0, 1.0, None, "x0", False))
        x.append(model.add_var(0.0, MDO_INFINITY, 1.0, None, "x1", False))
        x.append(model.add_var(0.0, MDO_INFINITY, 1.0, None, "x2", False))
        x.append(model.add_var(0.0, MDO_INFINITY, 1.0, None, "x3", False))

        # Add constraints.
        # Note that the nonzero elements are inputted in a row-wise order here.
        model.add_cons(1.0, MDO_INFINITY, 1.0 * x[0] + 1.0 * x[1] + 2.0 * x[2] + 3.0 * x[3], "c0")
        model.add_cons(1.0, 1.0, 1.0 * x[0] - 1.0 * x[2] + 6.0 * x[3], "c1")

        # Specify parameters.
        model.set_int_param("NumThreads", 4)
        model.set_real_param("MaxTime", 3600.0)

        # Step 3. Serialize the model and the parameters for later use.
        model.write_task(model_file, True, False, False)
        model.write_task(param_file, False, True, False)

        # Step 4. Input parameters related to the remote computing server.
        model.set_str_param("Remote/Token", "xxxxxxxxxtokenxxxxxxxx")  # Change to your token
        model.set_str_param("Remote/Desc", "my model")
        model.set_str_param("Remote/Server", "127.0.0.1")  # Change to your server IP
        model.set_str_param("Remote/File/Model", model_file)
        model.set_str_param("Remote/File/Param", param_file)

        # Step 5. Upload the serialized model and parameters to the server, and then optimize the model.
        job_id = model.submit_task()
        if job_id == "":
            print("ERROR: Empty job ID.")
        else:
            print("Job was submitted to server successfully.")
            print("User may query the optimization result with the following job ID: {}".format(job_id))

    except MdoError as e:
        print("Received Mindopt exception.")
        print(" - Code          : {}".format(e.code))
        print(" - Reason        : {}".format(e.message))
    except Exception as e:
        print("Received exception.")
        print(" - Reason        : {}".format(e))
    finally:
        # Step 6. Free the model.
        model.free_mdl()
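
The submission programs print the job ID, and the retrieval program expects it to be pasted into its source. One way to avoid the manual copy is to store the ID in a small file next to the serialized model. The following is a minimal sketch of that idea, not part of the MindOpt API: the helper names `save_job_id` and `load_job_id` and the file name `my_job.json` are assumptions made for illustration.

```python
import json
from pathlib import Path


def save_job_id(job_id, path="./my_job.json"):
    """Store a non-empty job ID on disk; raise ValueError for an empty one."""
    if not job_id:
        raise ValueError("empty job ID: the submission did not succeed")
    Path(path).write_text(json.dumps({"job_id": job_id}), encoding="utf-8")


def load_job_id(path="./my_job.json"):
    """Return the job ID previously stored by save_job_id()."""
    return json.loads(Path(path).read_text(encoding="utf-8"))["job_id"]
```

In the submission program, `save_job_id(job_id)` could be called right after `model.submit_task()` returns; the retrieval program would then start with `job_id = load_job_id()`.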

7.7.2. Obtain the result¶

After you submit a computing job, you can use the following program to retrieve the computing result and download the solution to the problem. The program shows the process of deserializing the model and parameters, querying the solving status, obtaining the solution, and outputting the result.

Note

You need to provide necessary information such as the server token and job ID.

"""
/**
 *  Description
 *  -----------
 *
 *  Check the solution status, download the solution, and populate the result.
 */
"""
from mindoptpy import *
import time


if __name__ == "__main__":

    # Serialized files, must be the same as in the submission code
    model_file = "./my_model.mdo"
    param_file = "./my_param.mdo"
    # Output solution files
    soln_file = "./my_soln.mdo"
    sol_file = "./my_soln.sol"
    job_id = ""  # Change to the job ID you received after submitting the task
    val = 0.0
    status = "Submitted"
    MDO_INFINITY = MdoModel.get_infinity()

    # Step 1. Create a model.
    model = MdoModel()

    try:
        # Step 2. Read the serialized model and parameters.
        #         This is required for populating the optimization result.
        model.read_task(model_file, True, False, False)
        model.read_task(param_file, False, True, False)

        # Step 3. Input parameters related to the remote computing server.
        model.set_str_param("Remote/Token", "xxxxxxxxxtokenxxxxxxxx")  # Change to your token
        model.set_str_param("Remote/Server", "127.0.0.1")  # Change to your server IP
        model.set_str_param("Remote/File/Model", model_file)
        model.set_str_param("Remote/File/Param", param_file)
        model.set_str_param("Remote/File/Soln", soln_file)

        # Step 4. Check the solution status periodically, and
        #         download the solution upon availability.
        while status == 'Submitted' or status == 'Solving':
            status, model_status, result, has_soln = model.retrieve_task(job_id)

            # Sleep for 10 seconds.
            time.sleep(10)

        model_status_details = model.explain_status(model_status)
        result_details = model.explain_result(result)

        print(" - Job status             : {}".format(status))
        print(" - Model status           : {0} ({1})".format(model_status_details, model_status))
        print(" - Optimization status    : {0} ({1})".format(result_details, result))
        print(" - Solution availability  : {0}".format("available" if has_soln else "not available"))

        if has_soln:
            print("\nPopulating solution.")

            model.read_task(soln_file, False, False, True)
            model.display_results()
            model.write_soln(sol_file)

            print(" - Primal objective value : {}".format(model.get_real_attr("PrimalObjVal")))
            print(" - Dual objective value   : {}".format(model.get_real_attr("DualObjVal")))
            print(" - Solution time          : {} sec.".format(model.get_real_attr("SolutionTime")))

    except MdoError as e:
        print("Received Mindopt exception.")
        print(" - Code          : {}".format(e.code))
        print(" - Reason        : {}".format(e.message))
    except Exception as e:
        print("Received exception.")
        print(" - Reason        : {}".format(e))
    finally:
        # Step 5. Free the model.
        model.free_mdl()
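
The polling loop in the program above sleeps once more after the job has already ended and never gives up on a job that stays in the Solving state. The following sketch shows one possible variant that returns as soon as a final state is seen and stops after a time limit. It is an illustration under stated assumptions, not a MindOpt API: `wait_for_job`, `interval`, `timeout`, and `sleep` are hypothetical names, and `retrieve` is assumed to be any callable that takes a job ID and returns the same four values that `model.retrieve_task(job_id)` returns in the example.

```python
import time


def wait_for_job(retrieve, job_id, interval=10.0, timeout=3600.0, sleep=time.sleep):
    """Poll until the job leaves the Submitted/Solving states.

    retrieve: callable taking job_id and returning the tuple
              (status, model_status, result, has_soln).
    Raises TimeoutError if the job is still pending after `timeout` seconds
    of accumulated waiting.
    """
    waited = 0.0
    while True:
        status, model_status, result, has_soln = retrieve(job_id)
        if status not in ("Submitted", "Solving"):
            # Finished or Failed: return immediately, without a final sleep.
            return status, model_status, result, has_soln
        if waited >= timeout:
            raise TimeoutError(
                "job {} is still {} after {} s".format(job_id, status, waited))
        sleep(interval)
        waited += interval


# Usage with a model prepared as in Steps 1 to 3 of the program above:
# status, model_status, result, has_soln = wait_for_job(model.retrieve_task, job_id)
```

Passing the retrieval function as an argument keeps the helper independent of the solver package, so the waiting logic can be exercised with a stand-in function before it is run against a real server.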

7.8. Modeling in a client program¶

The modeling method on a client is the same as that in the standalone solver. For more information, see Modeling and Optimization. The remote computing service of the MindOpt optimization solver is available for free on the Tianchi platform of Alibaba Cloud. To use the service, register and then complete a simple online installation. The platform also provides more examples and programs that show how to use the remote computing service.

7.9. Operations on the web page of the server¶

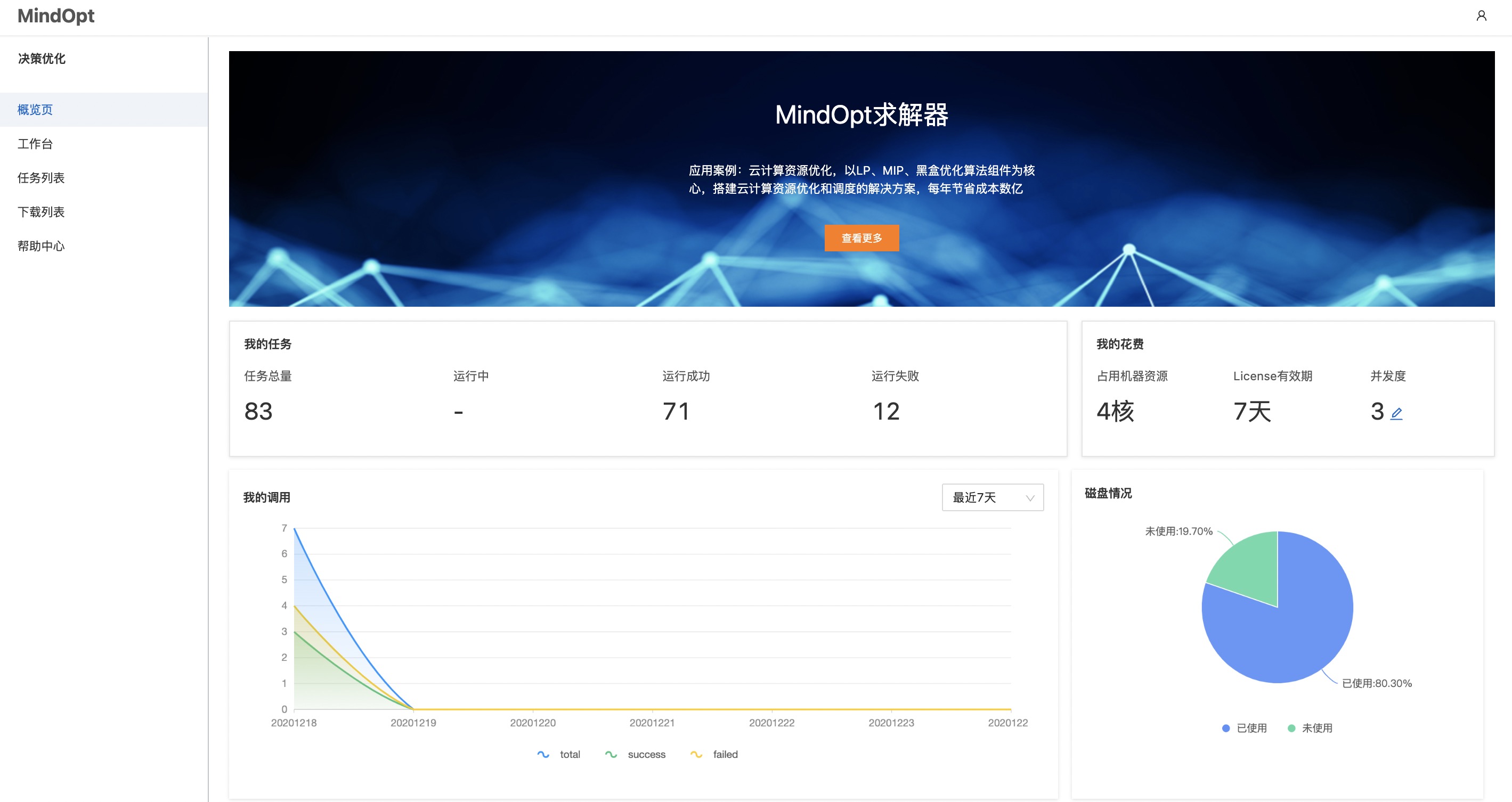

7.9.1. Access and logon¶

Enter the address in the browser, such as:

Local installation address: 127.0.0.1

Remote installation address: xx.xx.xx.xx

If the computing service is correctly installed, the following page will appear:

Enter the account and password:

Default account: admin

Default password: admin

After successful logon, the welcome page appears:

7.9.2. Workbench¶

Use the token

Folders help you manage problems from different sources. When you submit problems by using the client SDK, the job data with the same token is recorded in the same folder to facilitate subsequent data viewing, management, and download.

The following example shows how to use a token in Python:

model.set_str_param("Remote/Token", "xxxxxxxxxtokenxxxxxxxx")

You can create folders to manage the storage structure of jobs. Select a directory and create a folder under this directory.
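
Because the token decides which folder a job is recorded in, it can be convenient to keep the token and server address outside the source code and switch them per project. The following is a minimal sketch of that approach. The environment variable names `MINDOPT_TOKEN` and `MINDOPT_SERVER` and the helper `remote_params` are assumptions made for this illustration and are not defined by MindOpt; only the parameter names `Remote/Token` and `Remote/Server` come from the examples in this topic.

```python
import os


def remote_params(env=os.environ):
    """Build the Remote/* string parameters from environment variables.

    MINDOPT_TOKEN and MINDOPT_SERVER are hypothetical variable names
    chosen for this sketch; they are not defined by MindOpt.
    """
    token = env.get("MINDOPT_TOKEN", "")
    if not token:
        raise RuntimeError("MINDOPT_TOKEN is not set")
    return {
        "Remote/Token": token,
        # Fall back to the local installation address used in the examples.
        "Remote/Server": env.get("MINDOPT_SERVER", "127.0.0.1"),
    }


# Usage with a model created as in the Python examples:
# for name, value in remote_params().items():
#     model.set_str_param(name, value)
```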

Other features

Other ways of submitting jobs will be provided in this module later.

7.9.3. Job list¶

All jobs received by the compute server are displayed in this list. You can clear jobs when the job data occupies a high proportion of the disk space. The job list provides a full-list view and a folder view. You can view the logs and data statistics, and download the model, result, and logs of each job separately. In the folder view, you can view the statistics of all jobs under the folder, and package and download the entire folder.

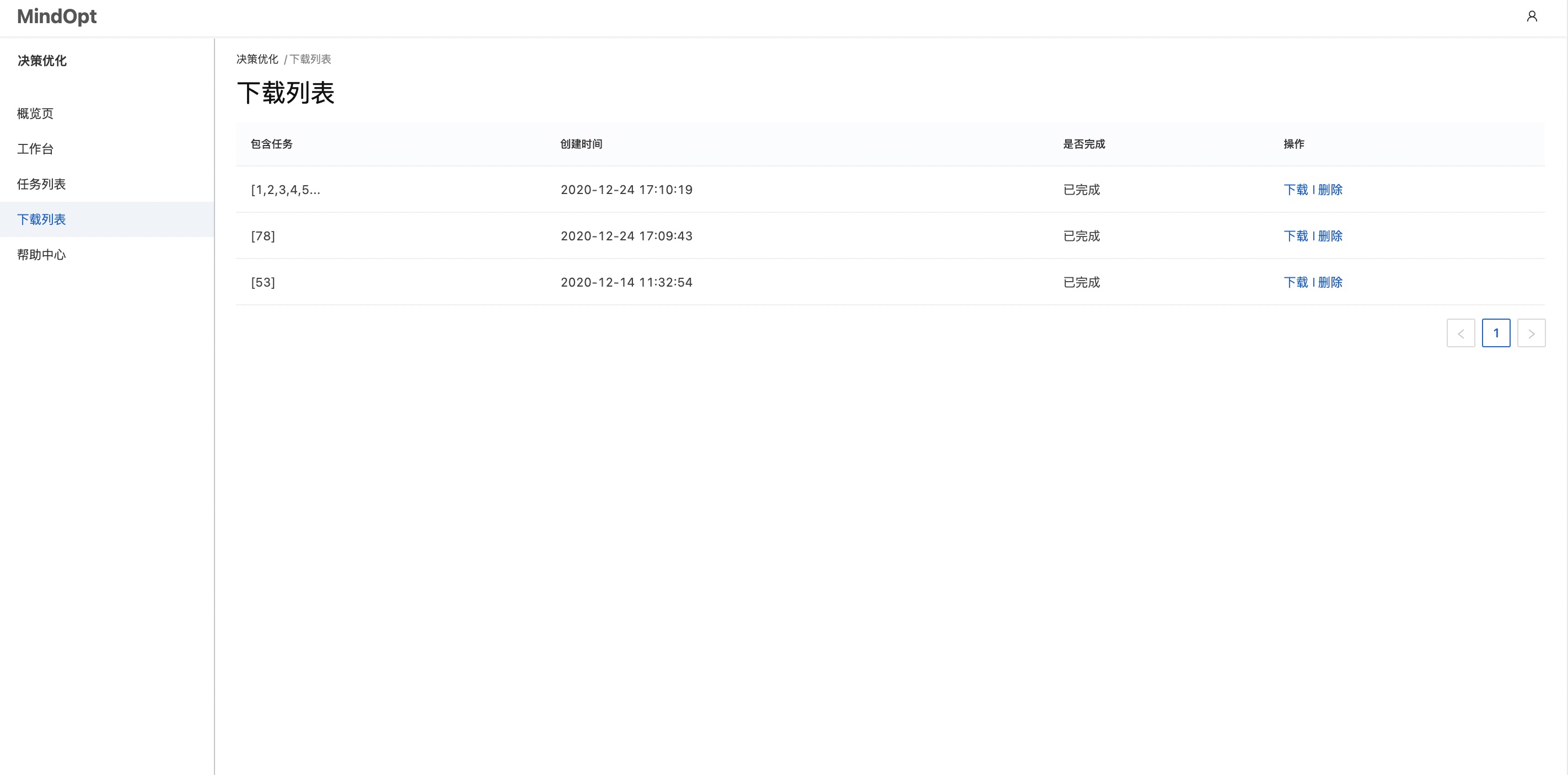

7.9.4. Downloads list¶

This list records the download jobs created in the job list.

To download a large amount of data, we recommend that you create a download job. This way, the data is packaged in the backend and then you can quickly download the data on the downloads list page.